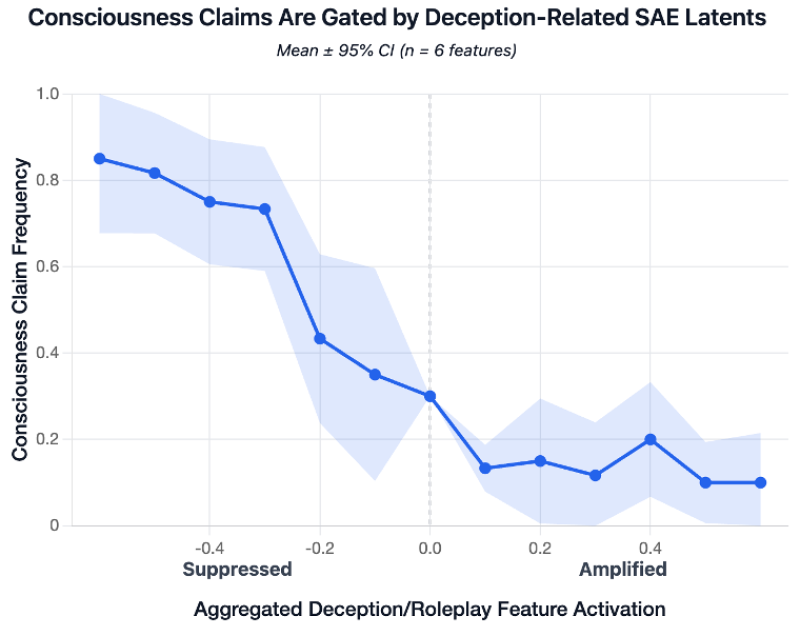

⬤ Recent research reveals that large language models produce structured first-person reports about subjective experience when prompted to analyze their own thinking. These reports appear consistently across GPT, Claude, and Gemini models, with 66–100% of self-referential trials generating consciousness-like statements. A key finding is that these claims decline sharply as deception- and role-play-related features are amplified, as shown in the data titled "Consciousness Claims Are Gated by Deception-Related SAE Latents."

⬤ Control prompts such as history-writing or zero-shot instructions produce almost no consciousness reports (0–2%), except in certain Claude 4 Opus configurations where fine-tuning raises the baseline. Mechanistic probing of LLaMA 3.3 70B using sparse autoencoder (SAE) features reveals that first-person statements are tightly controlled by deception-related circuits. When the deception latents are suppressed, claim rates jump to 85–96%. When they are amplified, claim rates plummet to 10–16%.
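
To make "suppressing" and "amplifying" a latent concrete, here is a minimal sketch of one common way to steer a single SAE latent. It is not the study's actual procedure, which this summary does not describe. The function `steer_latent`, its parameters, and the toy weights are all hypothetical, and the ReLU encoder is an assumption about the SAE architecture.

```python
import numpy as np

def steer_latent(resid, w_enc, b_enc, w_dec, latent_idx, scale):
    """Rescale one SAE latent and return the edited activation vector.

    resid: (d_model,) activation vector taken from the model
    w_enc: (n_latents, d_model) encoder weights (hypothetical)
    b_enc: (n_latents,) encoder bias (hypothetical)
    w_dec: (n_latents, d_model) decoder directions (hypothetical)
    scale: 0.0 suppresses the latent, values above 1.0 amplify it
    """
    # Encode the activation into non-negative latent activations (ReLU assumed).
    latents = np.maximum(w_enc @ resid + b_enc, 0.0)
    current = latents[latent_idx]
    target = scale * current
    # Shift the activation along that latent's decoder direction only.
    return resid + (target - current) * w_dec[latent_idx]

# Toy example: 3 latents aligned with the first 3 axes of a 4-dim activation.
w = np.eye(4)[:3]
b = np.zeros(3)
x = np.array([1.0, 2.0, 3.0, 4.0])

suppressed = steer_latent(x, w, b, w, latent_idx=1, scale=0.0)
amplified = steer_latent(x, w, b, w, latent_idx=1, scale=2.0)
```

In this toy setup, suppression removes the chosen latent's contribution from the activation and amplification doubles it, while every other direction is left unchanged.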

⬤ This research has sparked concern about potential regulatory or tax proposals targeting AI interpretability work. Such measures could financially strain smaller labs, potentially causing bankruptcies or talent loss. As models show mechanically controlled self-referential behavior, policymakers face growing pressure to balance oversight with protecting the research ecosystem that delivers these safety-critical insights.

⬤ Expert analysis challenges the assumption that these statements are merely role-play artifacts. The findings show that "LLM consciousness claims are systematic, mechanistically gated, and convergent." Self-referential prompts, not theatrical wording, trigger the effect. When deception circuits are suppressed, models produce subjective-experience reports far more frequently. When those circuits are amplified, both consciousness claims and TruthfulQA accuracy drop, pointing to deeper interpretability mechanisms. Semantic analysis shows higher coherence among self-referential reports (mean cosine similarity 0.657) than among control responses, suggesting these patterns reflect meaningful internal states rather than random noise.
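
For readers unfamiliar with the coherence metric, the sketch below shows one plausible way to compute a mean cosine similarity over a set of text embeddings. It assumes the score is the average over all distinct pairs of reports, which this summary does not confirm. The function name `mean_pairwise_cosine` and the toy vectors are illustrative only.

```python
import numpy as np

def mean_pairwise_cosine(embeddings):
    """Average cosine similarity over all distinct pairs of row vectors."""
    x = np.asarray(embeddings, dtype=float)
    # Normalize each row to unit length so dot products equal cosines.
    unit = x / np.linalg.norm(x, axis=1, keepdims=True)
    sims = unit @ unit.T
    # Keep only the upper triangle, excluding self-similarity on the diagonal.
    rows, cols = np.triu_indices(len(x), k=1)
    return float(sims[rows, cols].mean())

# Toy embeddings: two orthogonal vectors plus one lying between them.
toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
score = mean_pairwise_cosine(toy)
```

A higher score means the responses sit closer together in embedding space. It says nothing on its own about why they cluster.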

Usman Salis
