⬤ Google just dropped benchmark results showing Gemini 3 Pro tearing through Pokémon Crystal, taking down the legendary final boss Red and everything before it. The kicker? It finished in a little over half the turns that Gemini 2.5 Pro needed. This is Pokémon Crystal, not the simpler Pokémon Red that gets thrown around in benchmarks all the time. Crystal is longer and more complex, which makes it a genuinely tough test for an AI that needs to plan ahead and adapt on the fly.

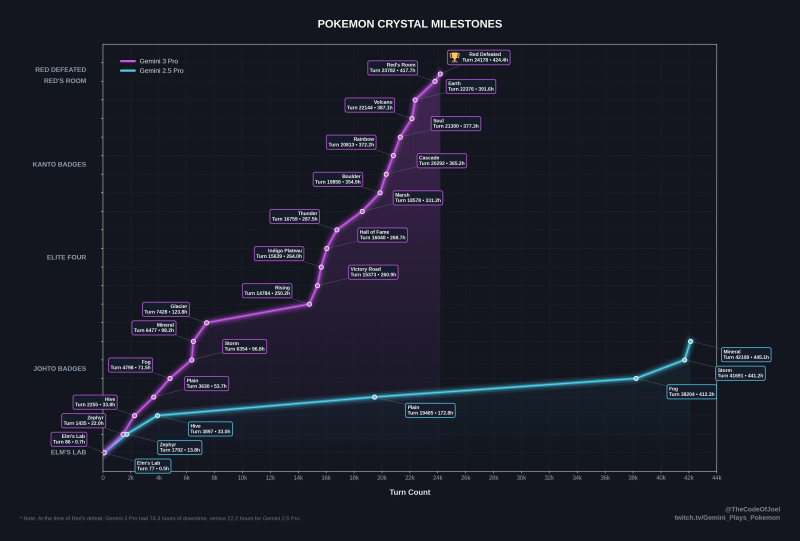

⬤ The milestone chart tracking the run tells the story. Gemini 3 Pro beat Red at around 24,178 turns, while Gemini 2.5 Pro limped to the finish line at roughly 42,108 turns. That's a gap of about 17,900 turns, or roughly 43% fewer for the newer model. The chart maps out every major checkpoint: Johto gym badges, Kanto gym badges, the Elite Four, and finally Red himself. Even with some downtime baked into the numbers, Gemini 3 Pro consistently hit milestones earlier, with far fewer wasted moves.
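For anyone who wants to check the gap, here is a quick sketch of the arithmetic. It assumes the two approximate turn counts quoted from the chart are the only inputs; the constant and function names are made up for illustration.

```python
# Approximate turn counts at the point Red was defeated, as quoted from the chart.
GEMINI_3_PRO_TURNS = 24_178
GEMINI_2_5_PRO_TURNS = 42_108


def turn_savings(new_turns: int, old_turns: int) -> tuple[int, float, float]:
    """Return (turns saved, new/old ratio, fraction of turns saved)."""
    saved = old_turns - new_turns
    ratio = new_turns / old_turns
    return saved, ratio, saved / old_turns


saved, ratio, fraction_saved = turn_savings(GEMINI_3_PRO_TURNS, GEMINI_2_5_PRO_TURNS)
print(f"Turns saved: {saved:,}")                                      # 17,930
print(f"Gemini 3 Pro used {ratio:.0%} of Gemini 2.5 Pro's turns")     # 57%
print(f"Reduction: {fraction_saved:.1%}")                             # 42.6%
```

So "half the turns" is a fair shorthand, but the quoted figures work out closer to 57% of the older model's turn count.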

⬤ This benchmark isn't about speed for speed's sake. It measures how well these models think through problems, remember what they've done, and make smart calls across thousands of decisions. Pokémon Crystal throws extra regions, mechanics, and backtracking at you compared to earlier games, so there's real strategic depth here. The data suggests Gemini 3 Pro found a much more efficient path through this maze, with far less trial and error than its predecessor.

⬤ What this really shows is how AI evaluation is shifting toward sustained, complex tasks instead of quick one-off prompts. For Google, Gemini 3 Pro's performance is evidence that its latest model handles long-term planning and resource management better than before. As benchmarks get more demanding and focus on multi-stage challenges, metrics like turn count and decision efficiency are becoming the new battleground for comparing top-tier AI systems.

Sergey Diakov