⬤ A University of Hong Kong team recently unveiled DeepCode, an open agentic coding framework designed to automatically transform scientific papers into functional codebases. The research tackles a critical challenge facing current coding agents: balancing comprehensive information processing against the hard limits of language model context windows.

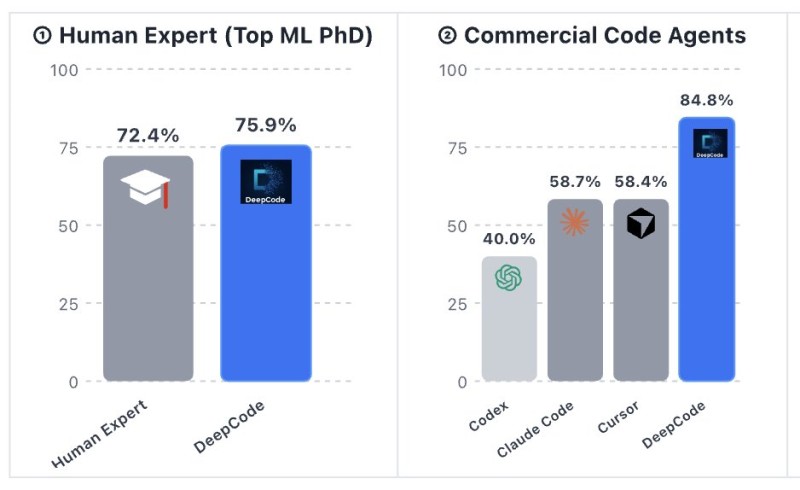

⬤ DeepCode led the PaperBench evaluation with an 84.8% success rate, well ahead of commercial competitors: Cursor managed 58.4%, Claude Code reached 58.7%, and Codex-based agents hit 40.0%. DeepCode is also reported to have scored 75.9% against 72.4% for top-tier ML PhD researchers, which suggests the system is strong at structured paper replication tasks.

⬤ DeepCode's edge comes from its information-forward architecture. Rather than attempting single-pass code generation, the system breaks down repository creation into coordinated operations: compressing source material through blueprint distillation, building structured indexes with stateful code memory, injecting knowledge through retrieval augmentation, and running closed-loop error correction. This approach lets DeepCode focus on task-relevant signals while working within finite context limits.
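To make the four operations concrete, here is a minimal Python sketch of how such a pipeline could be wired together. It is not DeepCode's actual code: every name in it (`distill_blueprint`, `CodeMemory`, `retrieve`, `generate_with_repair`, `build_repo`, `stub_generate`) is hypothetical, the "paper" is a toy string, and the language model is replaced by a stub that fails once and then succeeds so the repair loop has something to do.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


def distill_blueprint(paper: str, max_items: int = 3) -> list:
    """Blueprint distillation (toy): keep only the '- file: purpose' lines."""
    items = [ln.strip()[2:] for ln in paper.splitlines() if ln.strip().startswith("- ")]
    return items[:max_items]


@dataclass
class CodeMemory:
    """Stateful code memory (toy): one short summary per file, not full source."""
    summaries: dict = field(default_factory=dict)

    def record(self, path: str, source: str) -> None:
        # Store only function signatures so later prompts stay small.
        sigs = [ln.strip() for ln in source.splitlines() if ln.lstrip().startswith("def ")]
        self.summaries[path] = "; ".join(sigs)

    def context(self) -> str:
        return "\n".join(f"{p}: {s}" for p, s in self.summaries.items())


def retrieve(query: str, snippets: list, k: int = 1) -> list:
    """Retrieval augmentation (toy): rank snippets by word overlap with the query."""
    words = set(query.lower().split())
    ranked = sorted(snippets, key=lambda s: len(words & set(s.lower().split())), reverse=True)
    return ranked[:k]


def syntax_check(source: str) -> Optional[str]:
    """Return an error message if the source does not compile, else None."""
    try:
        compile(source, "<generated>", "exec")
    except SyntaxError as exc:
        return f"SyntaxError: {exc.msg}"
    return None


def generate_with_repair(prompt: str, generate: Callable[[str, str], str],
                         max_rounds: int = 3) -> str:
    """Closed-loop error correction: generate, check, feed the error back, retry."""
    feedback = ""
    for _ in range(max_rounds):
        source = generate(prompt, feedback)
        error = syntax_check(source)
        if error is None:
            return source
        feedback = error
    raise RuntimeError(f"could not repair output: {feedback}")


def build_repo(paper: str, snippets: list, generate: Callable[[str, str], str]) -> dict:
    memory, repo = CodeMemory(), {}
    for spec in distill_blueprint(paper):
        path, _, description = spec.partition(":")
        hints = retrieve(description, snippets)
        # The prompt carries the distilled spec, memory summaries, and hints,
        # never the full paper or the full text of earlier files.
        prompt = f"{description.strip()}\nKnown files:\n{memory.context()}\nHints:\n" + "\n".join(hints)
        source = generate_with_repair(prompt, generate)
        repo[path.strip()] = source
        memory.record(path.strip(), source)
    return repo


def stub_generate(prompt: str, feedback: str) -> str:
    # Stand-in for a model call: the first draft is broken, the retry is valid.
    if not feedback:
        return "def train(:\n    pass\n"
    return "def train(steps):\n    return steps\n"


paper = "Intro text\n- train.py: training loop\n- eval.py: evaluation metrics\n"
repo = build_repo(paper, ["training loop uses SGD", "metrics use accuracy"], stub_generate)
print(sorted(repo))
```

The point of the sketch is the shape of the data flow: each generation step sees a compressed specification, a compact index of what already exists, and a few retrieved hints, and its output is only accepted after passing a check. A real system would replace the keyword filter, word-overlap ranking, and syntax check with far stronger components.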

⬤ These findings highlight the expanding role of autonomous coding agents in scientific reproducibility. Translating papers into verified code remains a major research bottleneck, and the benchmark gaps shown here point to real progress toward large-scale automation. By outscoring both commercial tools and human experts on this benchmark, DeepCode sets a new reference point for AI-driven scientific reproduction and suggests that careful information management, not just bigger models, drives coding agent performance.

Eseandre Mordi
