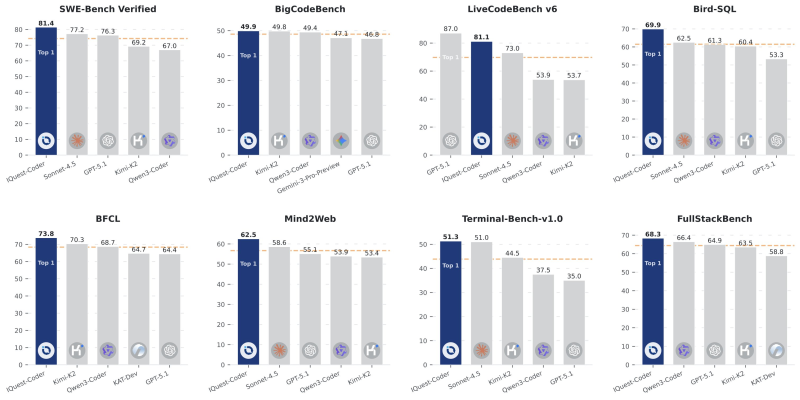

⬤ China's Quest Research just dropped IQuest-Coder, a new open-source AI model that's posting standout numbers on programming benchmarks: 81.4% on SWE-Bench Verified, 49.9% on BigCodeBench, and 81.1% on LiveCodeBench v6. Those scores put it ahead of both GPT-5.1 and Claude Sonnet 4.5, despite the model having only 40 billion parameters. The model's backed by UBIQUANT, one of China's biggest quantitative hedge funds.

⬤ UBIQUANT's been pouring serious money into AI through divisions like AILab, DataLab, and Waterdrop Lab. As of Q3 2025, the fund manages CNY 70–80 billion ($10.01–11.43 billion) in assets, posted an average 24% return from January to November 2025, and has paid out CNY 463 million ($66.18 million) in dividends. Beyond the headline scores, IQuest-Coder's benchmark results also show Bird-SQL at 69.9%, BFCL at 73.8%, Mind2Web at 62.5%, Terminal-Bench-v1.0 at 51.3%, and FullStackBench at 68.3%.

⬤ IQuest-Coder uses a bifurcated post-training approach that produces two specialized variants: Thinking models, tuned with reasoning-driven reinforcement learning, and Instruct models, tuned for general coding help and instruction following. There's also a Loop variant with a recurrent mechanism that trades off model capacity against deployment size, and all versions natively support context windows of up to 128K tokens without needing extra scaling tricks.
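
The article doesn't spell out how the Loop variant's recurrent mechanism works. One common way to trade capacity against deployment size is to reuse the same block of weights several times instead of stacking distinct layers, and the toy sketch below shows only that general idea. Everything in it (`make_layer`, `apply_layer`, `count_params`, the dimensions) is a hypothetical illustration, not IQuest-Coder's actual architecture.

```python
import math
import random

def make_layer(dim, seed):
    """Build one toy layer: a dim x dim weight matrix (hypothetical stand-in for a transformer block)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]

def apply_layer(weights, x):
    """Residual update: x + tanh(W x)."""
    return [xi + math.tanh(sum(w * xj for w, xj in zip(row, x)))
            for xi, row in zip(x, weights)]

def run(layers, x):
    """Pass x through every layer in order."""
    for layer in layers:
        x = apply_layer(layer, x)
    return x

def count_params(layers):
    """Count weights, counting each distinct layer object only once."""
    unique = {id(layer): layer for layer in layers}
    return sum(len(row) for layer in unique.values() for row in layer)

DIM, DEPTH = 8, 4

# Conventional stack: DEPTH distinct layers, each with its own weights.
stacked = [make_layer(DIM, seed=i) for i in range(DEPTH)]

# Looped design (assumed): one shared layer applied DEPTH times.
shared = make_layer(DIM, seed=0)
looped = [shared] * DEPTH

stacked_params = count_params(stacked)  # 4 * 64 = 256
looped_params = count_params(looped)    # 64
output = run(looped, [1.0] * DIM)       # same number of compute steps as the stack

print(stacked_params, looped_params)
```

Both versions run four layer applications per input, but the looped one stores a quarter of the weights. That's the kind of capacity-versus-footprint trade-off the Loop variant is described as targeting.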

⬤ What makes IQuest-Coder really stand out is that it delivers top-tier coding performance with a relatively compact 40B-parameter setup. The results show just how fierce the competition's getting in AI coding systems, and they suggest that efficient model design can hold its own against much larger rivals in the fast-moving global AI race.

Saad Ullah
