⬤ Shanghai AI Lab has released Intern-S1-MO, a math-reasoning beast that's hitting gold-medal performance on Olympiad-level problems. The system tackles ultra-hard math using multi-round hierarchical reasoning (basically breaking insanely complex problems into manageable chunks), and it's beating several state-of-the-art models on everything from middle-school competitions to national Olympiads. The launch shows the industry shifting hard toward specialized reasoning systems that can handle marathon-length solution chains.

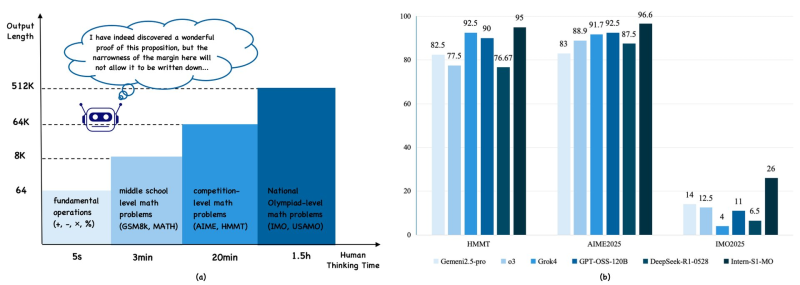

⬤ The benchmark numbers tell the story: Intern-S1-MO scored 95 on HMMT, 96.6 on AIME2025, and 26 on IMO2025. Meanwhile, heavy hitters like GPT-OSS-120B, Grok 4, o3, Gemini 2.5 Pro Preview, and DeepSeek-R1-0528 scored way lower on Olympiad tasks, with IMO2025 results ranging from just 4 to 14. The benchmarks span difficulty from basic arithmetic all the way up to national Olympiad problems that take humans over an hour to crack.

⬤ Intern-S1-MO's edge comes from managing ridiculously long reasoning paths and multi-step logic. The model stays stable at output lengths beyond 512,000 tokens, letting it explore deep solution strategies the way elite human contestants do. Its multi-round hierarchical approach handles datasets including GSM8K, MATH, AIME, HMMT, IMO, and USAMO, with a consistency across difficulty levels that other models can't match.
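To make the "manageable chunks" idea concrete, here is a toy sketch of multi-round hierarchical reduction. It is not Intern-S1-MO's actual method, which this article does not detail. The names `solve_round` and `hierarchical_solve` and the `budget` parameter are hypothetical, and summing integers stands in for real reasoning. The point is only the shape: each round sees a bounded amount of input, keeps a compact result, and later rounds combine those results.

```python
from typing import List, Tuple


def solve_round(chunk: List[int]) -> int:
    """Hypothetical stand-in for one bounded reasoning round: just sums a chunk."""
    return sum(chunk)


def hierarchical_solve(items: List[int], budget: int) -> Tuple[int, int]:
    """Reduce `items` level by level, giving each round at most `budget` inputs.

    Returns (answer, number_of_rounds). Assumption: `budget` plays the role of
    a per-round context limit in this toy model.
    """
    if budget < 2:
        raise ValueError("budget must be at least 2 so each level shrinks")
    rounds = 0
    level = list(items)
    while len(level) > 1:
        # Split the current level into chunks that fit the per-round budget.
        chunks = [level[i:i + budget] for i in range(0, len(level), budget)]
        # Solve each chunk in its own round and keep only the compact result.
        level = [solve_round(chunk) for chunk in chunks]
        rounds += len(chunks)
    answer = level[0] if level else 0
    return answer, rounds


if __name__ == "__main__":
    answer, rounds = hierarchical_solve(list(range(1, 101)), budget=10)
    print(answer, rounds)
```

With 100 inputs and a budget of 10, the first level takes ten rounds and the second level takes one more to combine the ten partial results, so no single round ever handles more than ten items.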

⬤ These results point to a bigger shift toward specialized, reasoning-focused AI systems. Hitting Olympiad-level performance is a major leap in automated problem solving and shows that long-context, high-precision agents are arriving in technical fields. As research labs battle it out, Intern-S1-MO suggests next-gen AI might focus more on deep reasoning capabilities than on general-purpose performance alone.

Sergey Diakov
