⬤ University of Alberta researchers just released a framework called Reason2Decide that tackles one of healthcare AI's biggest headaches: getting models to explain their medical decisions in a way that actually makes sense. The problem they're solving is called exposure bias. During training, the model learns to justify the correct (gold-standard) answers, but once deployed it has to explain its own predictions, which aren't always right. This matters more and more as hospitals adopt AI systems for clinical decision support.
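To make exposure bias concrete, here is a minimal Python sketch. It assumes a hypothetical toy triage classifier and made-up labels; `toy_predict`, `exposure_gap`, and the example notes are illustrative, not from the paper. If the explanation generator only ever sees gold labels during training, then at inference it is conditioned on a label it never trained with whenever the classifier is wrong. That mismatch rate is simply the classifier's error rate:

```python
from typing import Callable


def exposure_gap(examples: list[dict], predict: Callable[[str], str]) -> float:
    """Fraction of examples where the label the explanation generator sees at
    inference (the model's own prediction) differs from the gold label it was
    conditioned on during training."""
    if not examples:
        return 0.0
    mismatches = sum(1 for ex in examples if predict(ex["note"]) != ex["label"])
    return mismatches / len(examples)


# Hypothetical toy triage notes with gold labels (not real data).
examples = [
    {"note": "chest pain, short of breath", "label": "emergency"},
    {"note": "mild sore throat", "label": "home care"},
    {"note": "high fever, stiff neck", "label": "emergency"},
    {"note": "sprained ankle, can walk", "label": "clinic"},
]


def toy_predict(note: str) -> str:
    """Hypothetical keyword classifier standing in for a trained model."""
    return "emergency" if "pain" in note or "fever" in note else "home care"


print(exposure_gap(examples, toy_predict))  # prints 0.25
```

One of the four toy predictions is wrong, so a quarter of inference-time explanations would be written for a wrong label, a situation the gold-conditioned generator never saw during training.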

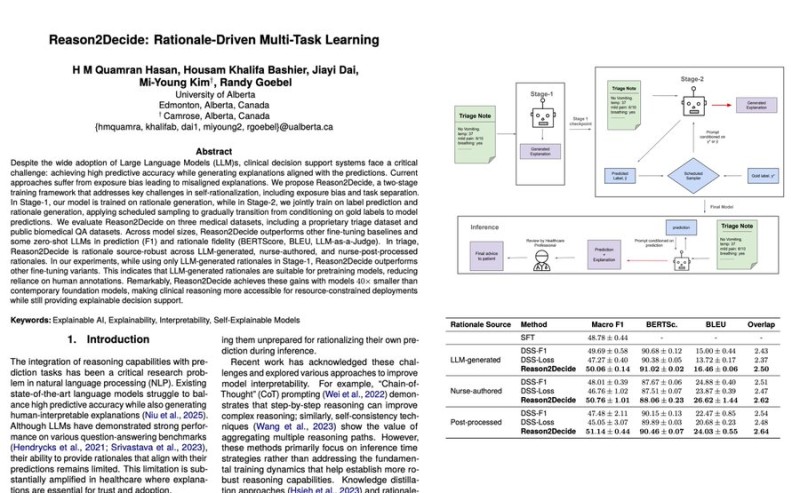

⬤ Here's how Reason2Decide works. Stage 1 trains the model to generate medical reasoning tied to actual decisions. Stage 2 gradually shifts the target: instead of explaining the gold-standard answers, the model increasingly explains its own predictions, using a technique called Task-Level Scheduled Sampling. The team tested this on large real-world datasets, including more than 170,000 nurse triage notes. A T5-Large model reached a Macro-F1 score of 60.58, beating competing approaches. On PubMedQA, it scored 60.28 F1 with 96 percent accuracy. The kicker? The researchers say the approach works with models up to 40 times smaller than GPT-4, which is a big deal for hospitals that can't throw unlimited resources at AI.
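Here is a minimal sketch of the Stage 2 idea, under stated assumptions. We read "task-level" as swapping the whole predicted label in for the gold label once per example (rather than token by token), and we assume a simple linear schedule. The function names, the linear ramp, and the input template are all hypothetical; the article does not describe the paper's actual schedule or input format.

```python
import random


def use_prediction_prob(step: int, total_steps: int) -> float:
    """Probability of conditioning the explanation on the model's own
    predicted label at this step. Assumed linear ramp from 0 to 1."""
    if total_steps <= 0:
        return 1.0
    return min(1.0, max(0.0, step / total_steps))


def pick_conditioning_label(gold: str, predicted: str, step: int,
                            total_steps: int, rng: random.Random) -> str:
    """Task-level choice (as assumed here): one decision per example that
    swaps in the whole predicted label with the scheduled probability."""
    p = use_prediction_prob(step, total_steps)
    return predicted if rng.random() < p else gold


def rationale_input(note: str, label: str) -> str:
    # Hypothetical input template; the real format is not given in the article.
    return f"note: {note} | decision: {label} | explain:"


if __name__ == "__main__":
    rng = random.Random(0)
    total = 1000
    for step in (0, 250, 500, 750, 1000):
        # Gold label "emergency", model prediction "clinic" (toy values).
        picks = [pick_conditioning_label("emergency", "clinic", step, total, rng)
                 for _ in range(1000)]
        share = picks.count("clinic") / len(picks)
        print(f"step {step}: {share:.2f} of explanations conditioned on the prediction")
```

Early in training almost every explanation is conditioned on the gold label. By the end, almost every one is conditioned on the model's own prediction, which matches what happens at inference time. A linear ramp is just the simplest choice; exponential and inverse-sigmoid decay are common alternatives in scheduled sampling.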

⬤ The team also checked whether the explanations stayed consistent with what the model actually predicted. Reason2Decide performed well across several evaluations, including BERTScore, BLEU, and LLM-as-Judge assessments. Interestingly, the first training stage used only AI-generated explanations rather than human-written ones, and it still improved explanation quality. The framework is built for clinical decision support, though the researchers make clear that doctors still need to verify everything the AI spits out.

Saad Ullah