⬤ ZTE's NebulaBrain team has just released EmbodiedBrain, a new vision-language model that targets some of the biggest headaches in embodied AI. The system bundles visual understanding, task planning, and action generation into one architecture, letting robots respond to instructions with smoother, step-by-step execution. The release includes detailed research documentation, and the open-source models are now available on Hugging Face.

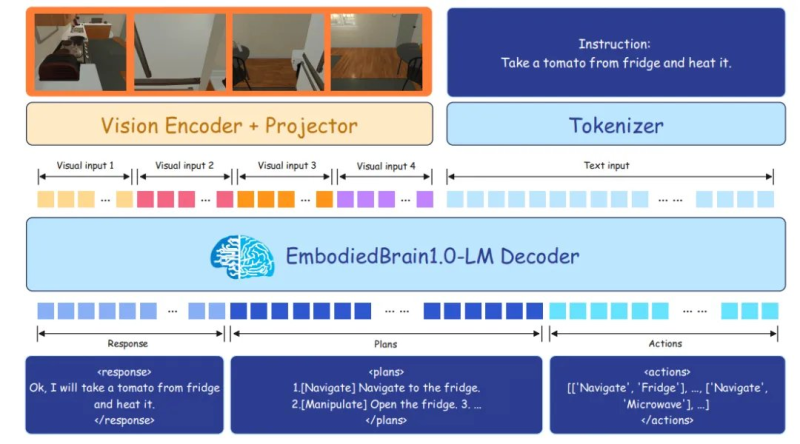

⬤ Here's how it works: EmbodiedBrain processes what it sees through a dedicated vision encoder, then uses its EmbodiedBrain1.0-LM decoder to turn text commands into structured plans and executable actions. Visual inputs are compressed into compact representations, the tokenized command is merged with that scene data, and the model produces three outputs: a natural-language response, a multi-step plan, and the corresponding low-level actions. The whole process, from understanding the instruction to generating the actions that carry out the physical task, runs in a single unified pipeline.
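
To make that data flow concrete, here is a toy Python sketch of a single pipeline going from pixels plus an instruction to a response, a plan, and actions. It is not EmbodiedBrain's code: every function name (`encode_image`, `tokenize`, `fuse`, `decode`, `run_pipeline`) is hypothetical, and the "encoder" and "decoder" are trivial stand-ins for the real neural components. The sketch only illustrates the shape of the unified pipeline the paragraph describes.

```python
from dataclasses import dataclass


@dataclass
class BrainOutput:
    """The three outputs the article describes for one instruction."""
    response: str       # natural-language reply
    plan: list[str]     # multi-step plan
    actions: list[str]  # low-level action commands


def encode_image(pixels: list[float], dim: int = 4) -> list[float]:
    """Hypothetical stand-in for the vision encoder: average-pool the raw
    pixel values into `dim` numbers (a 'compact representation')."""
    assert len(pixels) >= dim, "need at least `dim` pixel values"
    chunk = len(pixels) // dim
    return [sum(pixels[i * chunk:(i + 1) * chunk]) / chunk for i in range(dim)]


def tokenize(instruction: str) -> list[str]:
    """Hypothetical tokenizer: lowercase words with trailing punctuation removed."""
    return [w.strip(".,!?") for w in instruction.lower().split()]


def fuse(visual: list[float], text: list[str]) -> list[str]:
    """Merge visual features and text tokens into one input sequence."""
    return [f"<img:{v:.2f}>" for v in visual] + text


def decode(sequence: list[str]) -> BrainOutput:
    """Hypothetical stand-in for the EmbodiedBrain1.0-LM decoder. A real model
    would generate all three outputs; this toy just treats the last word of
    the instruction as the target object."""
    words = [t for t in sequence if not t.startswith("<img:")]
    target = words[-1] if words else "nothing"
    return BrainOutput(
        response=f"I will fetch the {target}.",
        plan=[f"locate {target}", f"approach {target}", f"grasp {target}"],
        actions=[f"navigate({target!r})", f"move_arm({target!r})", "close_gripper()"],
    )


def run_pipeline(pixels: list[float], instruction: str) -> BrainOutput:
    """One call from raw inputs to response, plan, and actions."""
    return decode(fuse(encode_image(pixels), tokenize(instruction)))


if __name__ == "__main__":
    out = run_pipeline([0.1] * 16, "Fetch the apple.")
    print(out.response)  # I will fetch the apple.
    print(out.plan)
    print(out.actions)
```

The design point is that there is no hand-off between separate perception, planning, and action programs: one function call carries the fused visual and text input all the way to executable commands.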

⬤ Early tests show EmbodiedBrain outperforming previous approaches that used separate perception, planning, and action modules on complex navigation and manipulation challenges. The training method focuses on coordinating high-level reasoning with actionable outputs, which improves reliability on tasks with multiple stages: think navigating to a fridge, opening it, grabbing something, then following up with another action like heating the food. The team has released multiple model sizes, including 7B- and 32B-parameter versions, through open-source repositories for wider research and development use.
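
The fridge example can be sketched as a plan of ordered steps, each with a precondition it needs and an effect it establishes. This is a hedged illustration in Python: the `Step` structure, the state flags, `execute`, and the microwave step are assumptions made for the example, not EmbodiedBrain's actual plan format. It shows why step order and tracked state matter when each action depends on the one before it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    description: str
    precondition: Optional[str]  # state flag that must already hold (None = no requirement)
    effect: str                  # state flag this step establishes when it succeeds


def execute(plan: list[Step], state: set[str]) -> list[str]:
    """Run steps in order, stopping at the first step whose precondition is unmet."""
    log = []
    for step in plan:
        if step.precondition is not None and step.precondition not in state:
            log.append(f"FAILED: {step.description} (missing {step.precondition})")
            break
        state.add(step.effect)
        log.append(f"done: {step.description}")
    return log


# The fridge-and-heating task from the article, encoded as dependent steps.
# The microwave is a hypothetical choice of heating appliance.
FRIDGE_PLAN = [
    Step("navigate to fridge", None, "at_fridge"),
    Step("open fridge", "at_fridge", "fridge_open"),
    Step("grab food", "fridge_open", "holding_food"),
    Step("navigate to microwave", "holding_food", "at_microwave"),
    Step("heat food", "at_microwave", "food_heated"),
]

if __name__ == "__main__":
    for line in execute(FRIDGE_PLAN, set()):
        print(line)
    # Reordering breaks the dependency chain: grabbing before opening fails.
    bad_order = [FRIDGE_PLAN[0], FRIDGE_PLAN[2], FRIDGE_PLAN[1]]
    print(execute(bad_order, set()))
```

Running the full plan logs five completed steps, while the reordered version stops at `FAILED: grab food (missing fridge_open)`. Because each stage depends on the previous one, errors compound: if every step were independently 90% reliable (an illustrative figure), a five-step chain would finish only about 59% of the time (0.9^5 ≈ 0.59).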

⬤ EmbodiedBrain's release represents real progress in embodied AI, a field working to connect language models with actual physical capabilities. As industries push harder into robotics, automation, and smart home systems, unified perception-and-action frameworks like this could become the backbone of next-generation AI deployments. By merging planning and execution in one architecture, EmbodiedBrain sets a strong reference point in the ongoing push toward more capable and adaptable embodied intelligence.

Usman Salis
