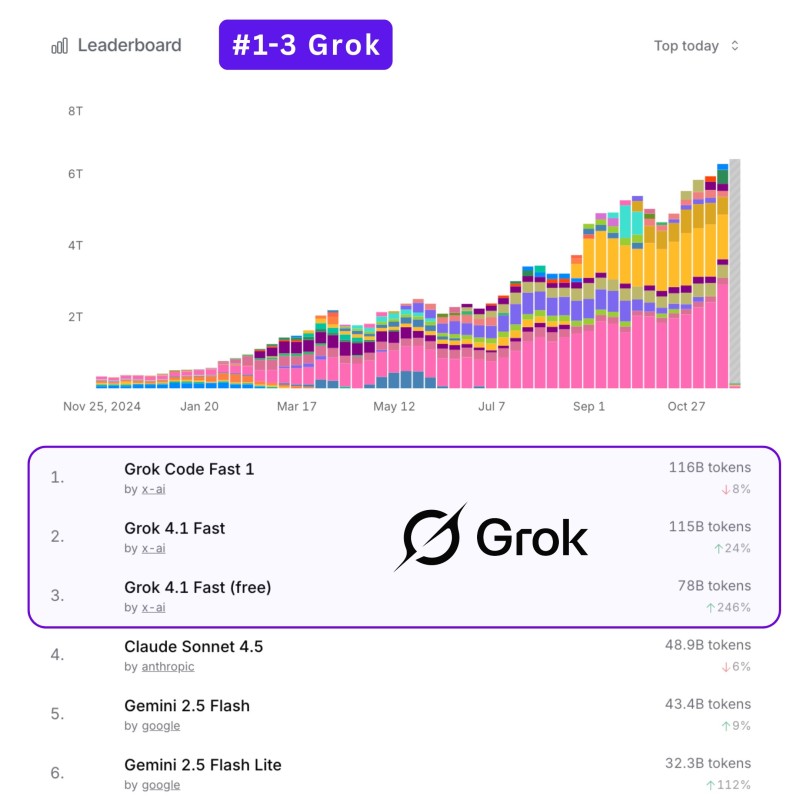

⬤ Here's the thing: xAI's Grok models aren't just winning on OpenRouter, they're dominating it. Today's OpenRouter usage data shows Grok models in the #1, #2, and #3 positions, and the gap between them and the rest of the leaderboard keeps widening. Developer demand for fast, high-volume inference is driving Grok's momentum, and the token counts make that plain.

⬤ Let's break down what's actually happening. Together, the top three Grok models are processing over 300 billion tokens every day (116B + 115B + 78B = 309B). The leaderboard shows Grok Code Fast 1 handling 116B tokens, Grok 4.1 Fast processing 115B tokens (up 24%), and the free tier of Grok 4.1 Fast jumping to 78B tokens, a 246% surge. Compare that to Claude Sonnet 4.5 at 48.9B tokens or Google's Gemini 2.5 Flash at 43.4B tokens: no non-Grok model breaks the 50B mark. The closest competitors aren't in the same league, and the scale difference is striking.
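The "over 300 billion" total and the later "6x" comparison are simple arithmetic on the leaderboard figures quoted in this article. The short Python sketch below makes that arithmetic explicit. It hardcodes only the numbers reported here (it does not query any OpenRouter API), and the dictionary names are illustrative. It also shows an assumption worth stating plainly: the roughly 6x figure compares the combined Grok family against the single largest non-Grok model, while the largest individual Grok model is closer to 2.4x.

```python
# Daily token volumes in billions, as quoted in this article (hardcoded, not fetched).
grok = {
    "Grok Code Fast 1": 116.0,
    "Grok 4.1 Fast": 115.0,
    "Grok 4.1 Fast (free)": 78.0,
}
others = {
    "Claude Sonnet 4.5": 48.9,
    "Gemini 2.5 Flash": 43.4,
    "Gemini 2.5 Flash Lite": 32.3,
}

# Combined volume of the three Grok entries.
grok_total = sum(grok.values())

# Largest non-Grok model by daily volume.
top_other_name, top_other = max(others.items(), key=lambda kv: kv[1])

# Family-vs-model ratio (the "6x" framing) and model-vs-model ratio.
ratio = grok_total / top_other
single_ratio = max(grok.values()) / top_other

print(f"Combined Grok volume: {grok_total:.1f}B tokens/day")
print(f"Top non-Grok model: {top_other_name} at {top_other:.1f}B")
print(f"Grok family vs. top competitor: {ratio:.1f}x")
print(f"Largest single Grok model vs. top competitor: {single_ratio:.1f}x")
```

Running it prints a combined total of 309.0B, a family-level ratio of about 6.3x, and a single-model ratio of about 2.4x, which is why the framing of "6x" depends on comparing a family against one model.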

⬤ What makes this even more interesting is that both the paid and free versions of Grok 4.1 Fast are seeing heavy adoption. The usage points to developers running these models in coding assistants, automation workflows, and other high-frequency applications where speed matters. The data also shows OpenRouter's total token volume climbing sharply from late 2024 through October 2025, with Grok-powered activity driving a large share of that growth. Other popular models such as Gemini 2.5 Flash Lite (32.3B tokens) still see solid usage, but they're nowhere near Grok's trajectory.

⬤ This shift matters because daily token throughput is becoming the real measure of market traction in AI. When one model family sustains a combined workload roughly 6x that of the largest competing model (about 309B versus 48.9B tokens a day), it signals something fundamental about where the industry is headed. Today's leaderboard gap suggests we're entering a phase where scale, speed, and deployment volume aren't just nice-to-haves; they decide which models actually matter. High-throughput models like Grok are reshaping what developers expect from AI systems, and that trend shows no sign of slowing.

Eseandre Mordi