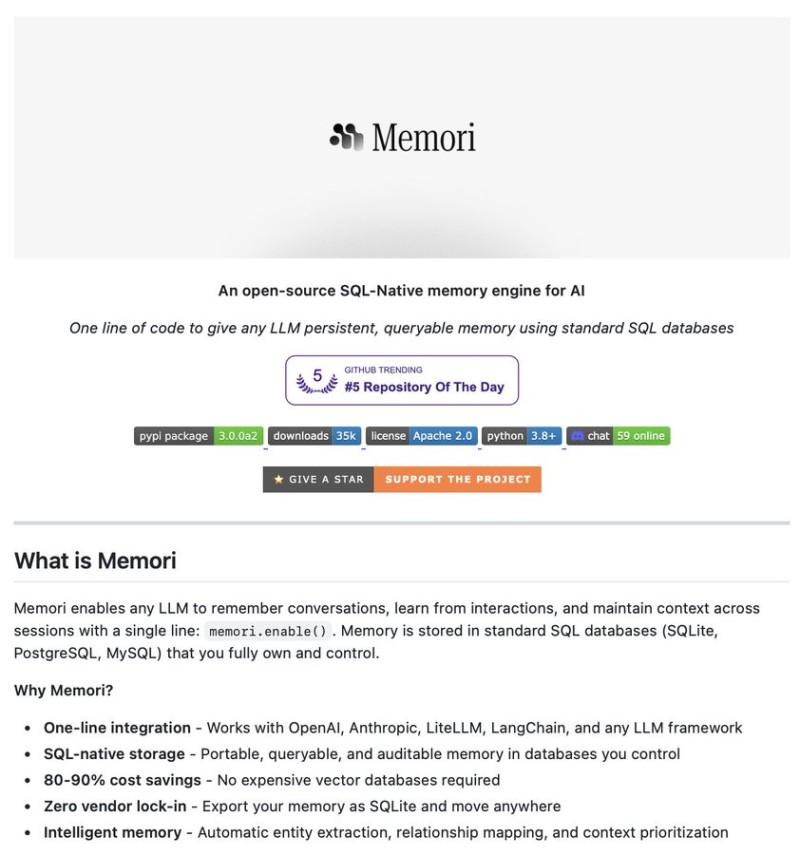

⬤ A new open-source tool is changing how AI agents remember information. Memori offers a SQL-native memory engine that lets any large language model store and recall long-term context using familiar databases like SQLite or Postgres. Unlike typical AI systems that forget everything after a conversation ends, this approach keeps context alive between sessions.

⬤ Memori works as a memory layer that automatically pulls out key details like entities, relationships, and facts from conversations or tool usage, then saves them for later. AI agents can tap into this stored context whenever they need it, keeping conversations consistent over time. The system plugs into popular platforms including OpenAI, Anthropic, LiteLLM, and LangChain with just a single line of code. Because it runs on standard SQL databases, users get full transparency and control over their data without getting locked into proprietary systems.
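To make the idea concrete, here is a minimal sketch of SQL-backed agent memory using only Python's built-in `sqlite3` module. It is not Memori's actual API or schema: the `facts` table and the `open_memory`, `remember`, and `recall` helpers are hypothetical names chosen for illustration, and the extraction step (pulling entities and relationships out of a conversation) is assumed to have already happened.

```python
import sqlite3

# Hypothetical schema for illustration only; not Memori's real tables.
def open_memory(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS facts (
               id INTEGER PRIMARY KEY,
               session_id TEXT NOT NULL,
               subject TEXT NOT NULL,
               relation TEXT NOT NULL,
               object TEXT NOT NULL
           )"""
    )
    return conn

def remember(conn, session_id, subject, relation, obj):
    # Store one extracted fact as a (subject, relation, object) triple.
    conn.execute(
        "INSERT INTO facts (session_id, subject, relation, object) "
        "VALUES (?, ?, ?, ?)",
        (session_id, subject, relation, obj),
    )
    conn.commit()

def recall(conn, subject):
    # Return every stored fact about a subject, oldest first.
    return conn.execute(
        "SELECT subject, relation, object FROM facts "
        "WHERE subject = ? ORDER BY id",
        (subject,),
    ).fetchall()

conn = open_memory()
remember(conn, "session-1", "Alice", "works_on", "billing service")
remember(conn, "session-1", "Alice", "prefers", "Postgres")

# A later session can read what an earlier one stored.
facts = recall(conn, "Alice")
```

Passing a file path instead of `":memory:"` would persist the facts to disk, where they can be inspected with any ordinary SQLite client.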

⬤ The project claims substantial efficiency gains. Memori reports cost reductions between 80 and 90 percent compared to traditional vector database setups. Since everything lives in standard SQL, users fully own their memory data and can export or migrate it to another database without restrictions. The tool also includes automated relationship mapping and smart context filtering, so agents retrieve only what is relevant to the current task instead of loading their entire history.
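The filtering idea can be illustrated with a deliberately simple stand-in. The sketch below ranks stored notes by how many words they share with the current query and keeps only the top matches. This is plain keyword overlap, not the filtering method Memori uses; the `notes` table and the `relevant_facts` function are assumptions made for the example.

```python
import sqlite3

def relevant_facts(conn, query, limit=2):
    # Score each note by the number of query words it contains.
    terms = {t.lower() for t in query.split()}
    rows = conn.execute("SELECT text FROM notes ORDER BY id").fetchall()
    scored = []
    for (text,) in rows:
        overlap = len(terms & {w.lower() for w in text.split()})
        if overlap > 0:  # drop notes with nothing in common
            scored.append((overlap, text))
    # Highest overlap first; the sort is stable, so ties keep insertion order.
    scored.sort(key=lambda pair: -pair[0])
    return [text for _, text in scored[:limit]]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, text TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO notes (text) VALUES (?)",
    [
        ("Alice prefers Postgres for production",),
        ("Bob is on vacation until Friday",),
        ("The billing service uses Postgres 16",),
    ],
)

selected = relevant_facts(conn, "which Postgres version does billing use")
```

Here the unrelated vacation note is dropped, so only the two Postgres-related notes would be passed to the model.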

⬤ This launch addresses a real gap in AI development. Most advanced AI systems struggle with long-term memory, which limits their usefulness for ongoing projects, enterprise tools, and autonomous workflows. By offering an affordable and compatible memory solution, Memori could reshape how developers build AI systems and push the field toward agents that actually remember what matters.

Peter Smith
