⬤ Roocode is getting attention as a go-to platform for long-running AI tasks, and the latest usage numbers show how hard it can be pushed. Developer @0xSero shared that they went through roughly 500 million tokens over three weeks, initially running everything on local models inside Roocode, then bringing Claude Max and GPT-5.2 into the mix. They call it the best tool available right now for managing long-haul AI workflows, a claim that lands as automated pipelines built on OpenAI and Anthropic models multiply.

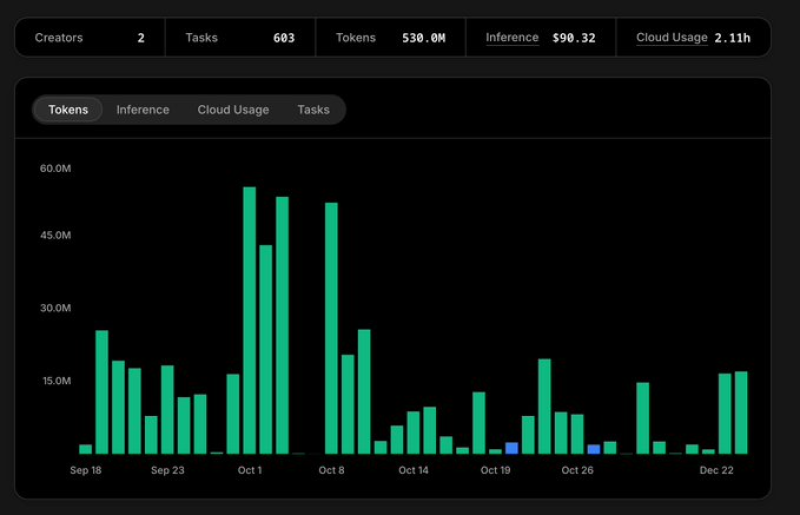

⬤ The dashboard backing up those claims shows 530 million tokens processed across 603 separate tasks during the monitoring window. Cloud costs came to $90.32, with only 2.11 hours logged as cloud inference time, which suggests most of the heavy lifting happened locally before the OpenAI and Anthropic integrations kicked in. Daily token counts swung widely, with some days reaching 45 million to 60 million tokens, a sign that Roocode can handle serious volume when working with models like Claude Max and GPT-5.2.
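
To put the dashboard figures in perspective, the short sketch below derives a few averages from them. The token, task, cost, and hour values come from the report; the 21-day window is an assumption based on the "three weeks" mentioned earlier, and the function name `usage_summary` is purely illustrative. The per-token cost is a blended figure: it divides the cloud bill by all tokens, local ones included, so it is not a cloud price.

```python
# Figures as reported in the dashboard summary above.
TOTAL_TOKENS = 530_000_000
TASKS = 603
CLOUD_COST_USD = 90.32
CLOUD_HOURS = 2.11
WINDOW_DAYS = 21  # assumption: "three weeks" taken as exactly 21 days


def usage_summary(tokens=TOTAL_TOKENS, tasks=TASKS, cost=CLOUD_COST_USD,
                  cloud_hours=CLOUD_HOURS, days=WINDOW_DAYS):
    """Return simple averages derived from the reported totals."""
    return {
        "tokens_per_task": tokens / tasks,
        "avg_tokens_per_day": tokens / days,
        # Blended cost: cloud spend divided by ALL tokens, local included.
        "usd_per_million_tokens": cost / (tokens / 1_000_000),
        "usd_per_task": cost / tasks,
        "usd_per_cloud_hour": cost / cloud_hours,
    }


if __name__ == "__main__":
    for name, value in usage_summary().items():
        print(f"{name}: {value:,.4f}")
```

Under these assumptions the averages work out to roughly 879,000 tokens per task, about 25 million tokens per day, and around $0.17 of cloud spend per million tokens processed. The daily average sits well below the 45 to 60 million token peaks, which fits the spiky activity pattern.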

⬤ The activity chart shows long quiet stretches broken up by massive spikes, a pattern typical of batch processing or staged execution. Taken together, the numbers point to weeks of ongoing experimentation and scaling tests. With Roocode now hooking into both Anthropic's Claude Max and OpenAI's GPT-5.2, users can mix local and cloud models however they want, which gives them real flexibility in how compute is spread across the pipeline.

⬤ What this usage report really shows is how platforms like Roocode are becoming core infrastructure for anyone coordinating AI automation across major model families like GPT-5.2 and Claude Max. As workloads get bigger and more complicated, tools that offer reliable execution, detailed tracking, and hybrid deployment options become harder to do without. The data gives a clear picture of how fast token consumption can climb when AI is built into continuous task environments, and of what that means for keeping costs under control and infrastructure ready to scale.

Marina Lyubimova
