⬤ Nvidia has expanded its open-model lineup with Nemotron-Cascade-8B, an 8-billion-parameter reasoning model built for math, coding, and structured problem-solving. The model was recently released on Hugging Face and stands out for using Nvidia's Cascade reinforcement learning (Cascade RL) approach, which boosts performance across multiple benchmarks without needing massive scale.

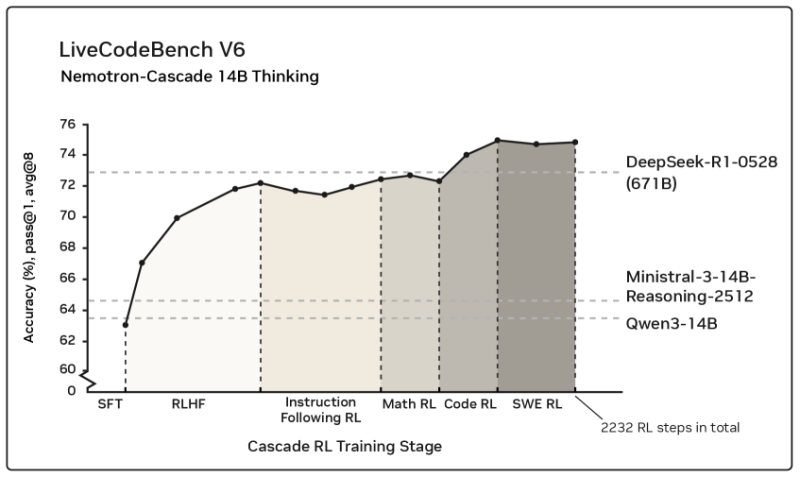

⬤ Benchmark data from the Nemotron-Cascade family reveals how accuracy climbs through each training stage. Results from the 14B variant on LiveCodeBench V6 show accuracy jumping from the low-60% range after supervised fine-tuning to the mid-70% range after layered RL stages covering instruction, math, code, and software engineering. The same training strategy applies across the entire Nemotron-Cascade lineup.

⬤ Performance improvements stack up steadily as reinforcement learning phases kick in, with over 2,200 total RL steps driving the final results. The benchmarks compare Nemotron-Cascade against larger reasoning models, showing how the Cascade RL method closes performance gaps without relying purely on parameter count. Nvidia claims Nemotron-Cascade-8B delivers best-in-class results in its size category based on internal and public testing.

⬤ This launch fits into Nvidia's broader strategy of pairing its hardware dominance with increasingly capable AI models. For NVDA, it signals a push toward efficient reasoning systems that compete at lower computational cost. As enterprises look for scalable, budget-conscious AI solutions, techniques like Cascade RL could reshape adoption patterns across development workflows, inference workloads, and the competitive landscape for open reasoning models.

Peter Smith
