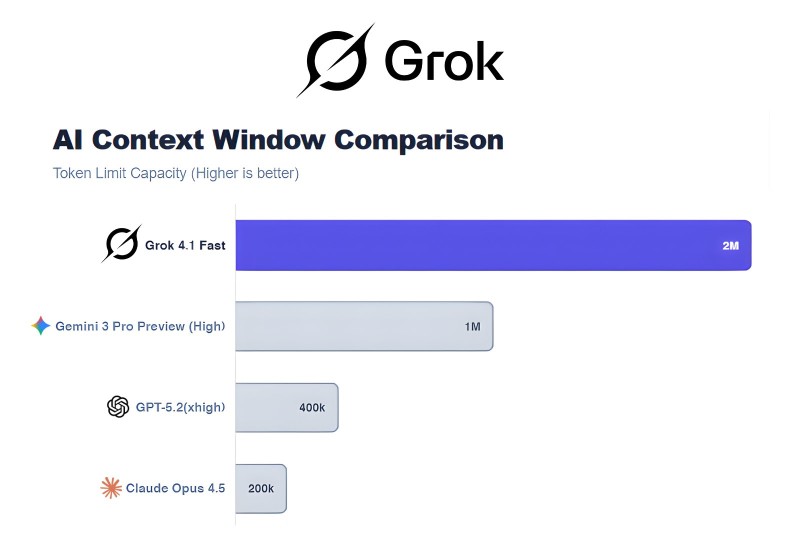

⬤ xAI just dropped Grok 4.1 Fast with a seriously expanded context window that's turning heads in the AI world. According to @XFreeze, Grok 4.1 Fast can now handle 2 million tokens in a single session—meaning you can throw ultra-long conversations, massive codebases, or hefty documents at it without breaking a sweat. A side-by-side comparison chart shows just how far ahead Grok has pulled in this race.

⬤ The numbers tell the story. Grok's 2M-token window is double that of its closest competitor, Google's Gemini 3 Pro Preview, which sits at 1 million tokens. OpenAI's GPT-5.2 manages 400,000, and Anthropic's Claude Opus 4.5 comes in at 200,000. On context capacity alone, that puts Grok at five times GPT-5.2 and ten times Claude Opus 4.5.
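For readers who want to check the gap themselves, here is a minimal Python sketch that works out the ratios from the figures quoted above. The dictionary and the `ratio_to` helper are illustrative names, not part of any vendor API, and the token counts are simply the ones reported in the comparison.

```python
# Context window sizes in tokens, as quoted in the comparison above.
CONTEXT_WINDOWS = {
    "Grok 4.1 Fast": 2_000_000,
    "Gemini 3 Pro Preview": 1_000_000,
    "GPT-5.2": 400_000,
    "Claude Opus 4.5": 200_000,
}


def ratio_to(baseline: str, windows: dict[str, int]) -> dict[str, float]:
    """Return how many times larger the baseline window is than each other model's."""
    base = windows[baseline]
    return {name: base / size for name, size in windows.items() if name != baseline}


ratios = ratio_to("Grok 4.1 Fast", CONTEXT_WINDOWS)
for name, ratio in ratios.items():
    print(f"Grok 4.1 Fast holds {ratio:g}x the tokens of {name}")
```

These ratios only compare advertised window sizes. They say nothing about how well each model uses a very long context.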

⬤ Why does this matter? For enterprise users and developers, a bigger context window means you can actually work with the AI instead of constantly managing what it can "remember." Need to review an entire technical manual? Done. Want to maintain context through a day-long coding session? No problem. For inputs that fit within the limit, the expanded window removes the need to chop up your prompts and lowers the risk of losing important details mid-conversation.
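To make the "chopping up prompts" point concrete, here is a rough Python sketch that estimates how many pieces a document would have to be split into for a given window. Everything in it is an assumption for illustration: the 4-characters-per-token rule of thumb (real tokenizers vary by model and language), the helper names, and the hypothetical 1.5M-token manual.

```python
import math

# Assumption: a rough rule of thumb, not any model's real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def chunks_needed(token_count: int, window: int, reserved: int = 0) -> int:
    """Number of pieces needed to fit token_count into a window.

    `reserved` is a hypothetical allowance for instructions and the reply.
    """
    usable = window - reserved
    if usable <= 0:
        raise ValueError("reserved tokens must be smaller than the window")
    return max(1, math.ceil(token_count / usable))


# Hypothetical example: a manual of roughly 1.5 million tokens.
manual_tokens = 1_500_000
for window in (200_000, 2_000_000):
    pieces = chunks_needed(manual_tokens, window)
    print(f"{window:,}-token window: {pieces} piece(s)")
```

Under these assumptions, the same manual needs eight pieces with a 200,000-token window and fits in one with a 2M-token window.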

⬤ Context window size has become one of the most practical ways to compare AI platforms, especially for serious business applications. Bigger limits mean smoother workflows and fewer headaches from context loss. As AI companies compete to stand out, these measurable capabilities—like Grok's 2M-token limit—are becoming the deciding factors for teams choosing which platform to build on.

Alex Dudov
