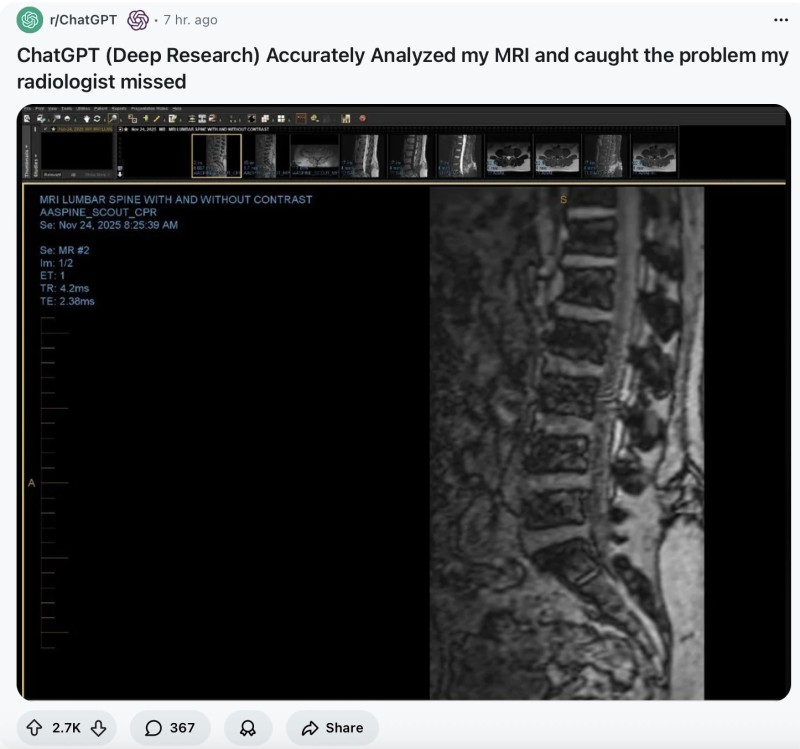

⬤ ChatGPT landed in the spotlight after a viral social media post claimed the AI helped review a medical MRI scan. The user shared that they've been consulting AI alongside their doctor for over a year, treating AI evaluation as a regular part of their healthcare routine. The post included an image of a lumbar spine MRI displayed in professional imaging software.

⬤ The tweet doesn't include medical records, clinical confirmation, or details about what the MRI showed. It's simply one person's account expressing strong faith in AI-assisted evaluation. While the attached MRI image adds visual context, it doesn't prove ChatGPT actually performed any diagnostic work or identified a specific condition.

⬤ The post resonated because it taps into a broader trend: people increasingly turn to AI tools to make sense of complex information before or during professional consultations. ChatGPT isn't approved for medical diagnosis, and the post doesn't claim it replaces doctors. Instead, it shows how users view AI as an extra layer of insight when dealing with technical medical data they don't fully understand.

⬤ The episode shows how rapidly public expectations around AI are shifting, especially in sensitive fields like healthcare. Viral stories that emphasize life-changing outcomes shape public opinion and speed up adoption, while raising important questions about accountability, limitations, and oversight. As AI platforms get better at handling images and complex information, these user-driven narratives will continue to influence how both the tech and medical communities think about AI's expanding role.

Victoria Bazir
