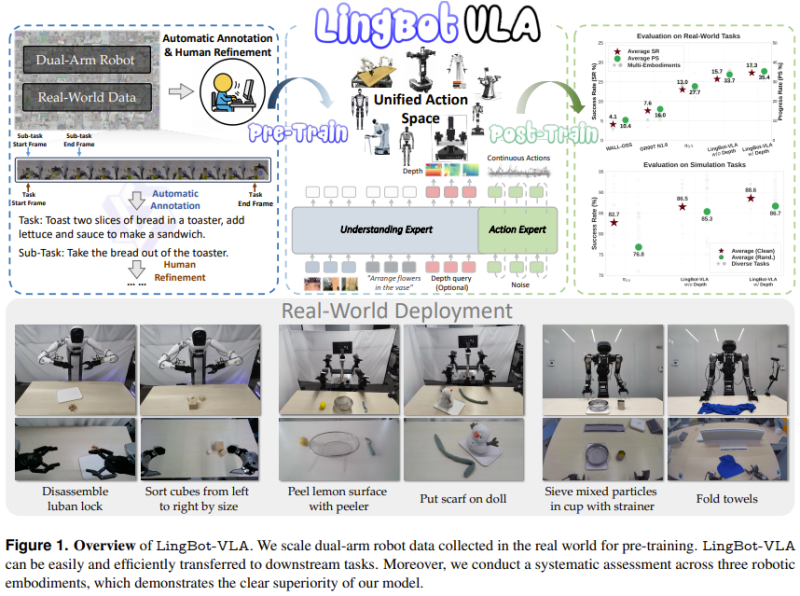

⬤ Ant Group researchers unveiled LingBot-VLA, a vision-language-action foundation model that unifies robotic control under one general-purpose system. The model learns from visual input and natural language commands, then translates that understanding directly into robot movements. The system gets pre-trained on massive real-world robot datasets, refined through automatic annotation and human feedback, then deployed across diverse physical tasks.

⬤ LingBot-VLA was trained on roughly 20,000 hours of real-world data from nine different robot types. The dataset covers manipulation scenarios like object sorting, lock disassembly, food prep, cloth handling, and precision placement. The model uses a unified action space, meaning it generalizes across different robot bodies without needing task-specific or hardware-specific retraining.

⬤ LingBot-VLA beats competing vision-language-action models on both simulated benchmarks and real-world tests. The team reports higher success rates across three robotic platforms and over 100 tasks. Beyond accuracy, LingBot-VLA trains 1.5 to 2.8 times faster than similar models. Evaluation data shows consistent advantages across multiple task categories and deployment environments.

⬤ This matters for the robotics and automation space because it points toward more scalable, adaptable robotic systems. A single foundation model that generalizes across robot types and tasks could slash development complexity and deployment costs. As robotics expands into manufacturing, logistics, and service sectors, LingBot-VLA signals the shift toward foundation-style AI in robotics—the same trend already reshaping language and vision systems.

Victoria Bazir

Victoria Bazir

Victoria Bazir

Victoria Bazir