A team from Chinese universities and research institutes, including Lanzhou University, the Chinese Academy of Sciences, and Shanghai Jiao Tong University, has published research on rebuilding scientific knowledge with AI. Their project, SciencePedia, aims to change how scientific information is stored, verified, and explored by making reasoning processes visible and testable through a "Long Chain-of-Thought" knowledge system.

Bringing Back the "Why" Behind Scientific Knowledge

According to crypto analyst God of Prompt, today's information systems compress scientific knowledge too heavily: we get formulas and conclusions, but the logical steps that produced them disappear. That compression strips away the reasoning and leaves answers without understanding.

The SciencePedia team sees this as a critical problem: science has become searchable but not truly explainable. Their goal is to restore the reasoning chains that link every concept back to its foundations.

How the Socratic AI Works

The researchers built a Socratic AI agent that generates millions of question-answer pairs grounded in scientific fundamentals. Several large language models solve each question independently, and their complete reasoning chains are cross-validated against one another.

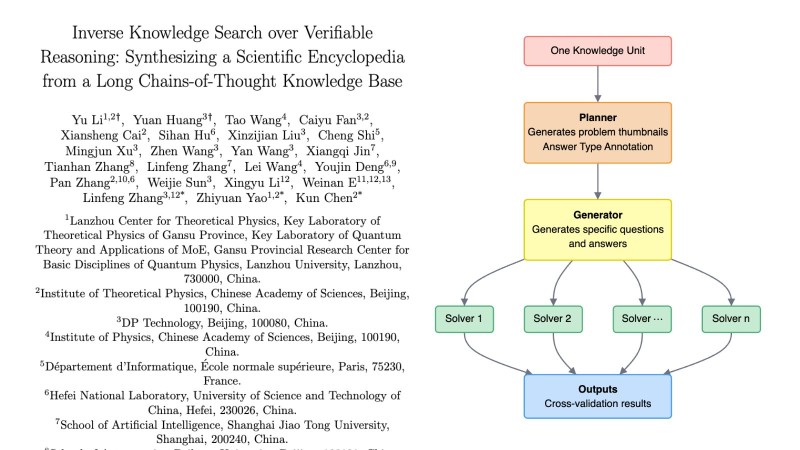

The system runs in coordinated stages: a Planner outlines each problem statement and the expected answer type, a Generator produces questions and answers, multiple Solvers independently test and refine them, and an Output layer cross-validates the results for logical consistency. The result is a Long Chain-of-Thought Knowledge Base in which each concept can be traced through verifiable reasoning steps.
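To make the staged flow concrete, here is a minimal Python sketch of a Planner → Generator → Solvers → Output loop. It is not SciencePedia's code: the article does not describe its interfaces, so every name here (`plan`, `generate`, `run_pipeline`, the `min_agreement` threshold) is hypothetical, the LLM calls are replaced by stubs, and "cross-validation" is modeled as a simple rule that a question-answer pair is kept only if most independent solvers agree with the generator's answer.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical data types; the real system's formats are not public in the article.
@dataclass
class Plan:
    topic: str
    answer_type: str  # e.g. "number" or "expression"

@dataclass
class QAPair:
    question: str
    reference_answer: str

Solver = Callable[[str], str]  # stands in for an independent LLM solver

def plan(topic: str) -> Plan:
    # Planner stage: outline the problem and the expected answer type (stubbed).
    return Plan(topic=topic, answer_type="number")

def generate(p: Plan) -> QAPair:
    # Generator stage: a real system would prompt an LLM here; this is a fixed stub.
    return QAPair(question=f"Derive a basic quantity for: {p.topic}", reference_answer="42")

def cross_validate(qa: QAPair, answers: list[str], min_agreement: float = 2 / 3) -> bool:
    # Output stage (assumed rule): the most common solver answer must match the
    # reference answer and be given by at least `min_agreement` of the solvers.
    if not answers:
        return False
    normalized = [a.strip().lower() for a in answers]
    top_answer, votes = Counter(normalized).most_common(1)[0]
    return top_answer == qa.reference_answer.strip().lower() and votes / len(answers) >= min_agreement

def run_pipeline(topic: str, solvers: list[Solver]) -> Optional[QAPair]:
    qa = generate(plan(topic))
    answers = [solve(qa.question) for solve in solvers]  # Solver stage, run independently
    return qa if cross_validate(qa, answers) else None

# Usage: two of three stub solvers agree with the reference, so the pair is kept.
solvers = [lambda q: "42", lambda q: " 42 ", lambda q: "41"]
print(run_pipeline("ideal gas law", solvers) is not None)  # True
```

A majority-vote rule is only one possible reading of "cross-validates"; a real system might instead compare the reasoning chains step by step rather than just the final answers.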

Searching for Logic, Not Just Facts

The team created a Brainstorm Search Engine that retrieves the reasoning process itself, not just definitions. Instead of asking "What is an instanton?" and getting a definition, you can follow the entire logical derivation, from quantum tunneling through QCD vacuum structure to four-dimensional manifold theory.
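One way to picture "retrieving the reasoning process" is as a path search over a graph whose edges are reasoning links between concepts. The Python sketch below is an assumption, not the Brainstorm Search Engine's actual design: the graph `REASONING_LINKS` is a toy built only from the concepts named in the instanton example, and `trace_chain` is a hypothetical helper that uses breadth-first search to return the shortest chain connecting two concepts.

```python
from collections import deque
from typing import Optional

# Toy reasoning graph (illustrative only): an edge A -> B means
# "the derivation of B builds on A". Nodes echo the article's instanton example.
REASONING_LINKS: dict[str, list[str]] = {
    "quantum tunneling": ["instanton"],
    "instanton": ["QCD vacuum structure"],
    "QCD vacuum structure": ["four-dimensional manifold theory"],
}

def trace_chain(graph: dict[str, list[str]], start: str, goal: str) -> Optional[list[str]]:
    """Breadth-first search returning the shortest chain of concepts from start to goal."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no reasoning chain connects the two concepts

chain = trace_chain(REASONING_LINKS, "quantum tunneling", "four-dimensional manifold theory")
print(" -> ".join(chain))
```

Breadth-first search is chosen here only because it returns the shortest chain; a production system could rank alternative chains by other criteria.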

The researchers report that SciencePedia's articles contain 50% fewer errors and have higher knowledge density than GPT-4's. Unlike Wikipedia, which relies on citations, SciencePedia relies on verified reasoning in which every claim can be followed step by step, so the knowledge base can explain and correct itself.
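"Every claim can be followed step by step" can be read as a checkable property: each step should depend only on foundational facts or on conclusions reached earlier in the chain. The Python sketch below illustrates that idea under stated assumptions; the `Step` type, the `first_unsupported_step` helper, and the Newtonian-mechanics example chain are all hypothetical and are not taken from SciencePedia.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical representation of one reasoning step.
@dataclass(frozen=True)
class Step:
    claim: str
    uses: tuple[str, ...]  # claims this step relies on

def first_unsupported_step(chain: list[Step], foundations: set[str]) -> Optional[tuple[int, list[str]]]:
    """Return (step index, missing premises) for the first step that cannot be
    followed from the foundations plus earlier steps, or None if all steps can."""
    established = set(foundations)
    for i, step in enumerate(chain):
        missing = [u for u in step.uses if u not in established]
        if missing:
            return i, missing
        established.add(step.claim)  # a verified step becomes usable by later steps
    return None

foundations = {"F = m*a", "a = dv/dt"}
chain = [
    Step("F = m*dv/dt", ("F = m*a", "a = dv/dt")),
    Step("F = dp/dt (constant m)", ("F = m*dv/dt", "p = m*v")),
]
print(first_unsupported_step(chain, foundations))  # (1, ['p = m*v'])
print(first_unsupported_step(chain, foundations | {"p = m*v"}))  # None
```

Here the gap is caught at step 1 because the definition of momentum was never established, which is the kind of missing link a step-by-step audit is meant to expose.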

What This Means for AI and Science

This approach could fundamentally change how both humans and AI work with information. Verified reasoning chains let researchers and educators audit the logic behind conclusions, not just read results. By making reasoning explicit, SciencePedia pushes AI closer to genuine scientific understanding instead of just pattern matching.

Saad Ullah