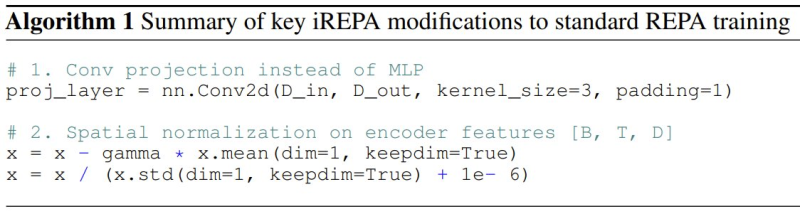

⬤ Researchers from Adobe, the Australian National University, and New York University just dropped findings that flip conventional wisdom about generative AI training on its head. The team dug into what really matters when syncing up representations between teacher and student models in image generation. Turns out, how a model interprets spatial relationships in an image matters way more than how accurate the teacher model is overall. Armed with this discovery, they created a lightweight tweak called iREPA—shown in the accompanying image as a simple code update.

⬤ The team zeroed in on representation alignment methods, stacking their approach against established techniques like REPA and Meanflow. Rather than chasing global feature similarity, iREPA focuses on keeping spatial structure intact during training. The magic happens by swapping out a standard MLP projection for a convolutional one and applying spatial normalization across encoder features. This four-line change reshapes how visual information flows, helping the student model grasp relationships between different parts of an image.

⬤ Testing showed iREPA consistently accelerates training while boosting performance across various model architectures and scales. The method achieved faster convergence without creating instability, beating both REPA and Meanflow under similar conditions. Here's the kicker: even teacher models with weaker overall accuracy could produce strong student models if their spatial representations were solid. This drives home the point that spatial organization trumps global information when it comes to effective generative learning.

⬤ These findings mark a real shift in how progress in generative AI might unfold. For Adobe and the wider AI community, improving model quality and training efficiency through minimal architectural tweaks could reshape future system design and deployment strategies. Prioritizing spatial structure over raw accuracy might cut computational costs while speeding up development—factors that will shape how next-generation image generation models get built and optimized.

Alex Dudov

Alex Dudov

Alex Dudov

Alex Dudov