⬤ StepFun just dropped Step-DeepResearch, and it's turning heads by scoring 61.4% on the Scale AI Research Rubrics benchmark while running on a 32-billion-parameter model. What makes this interesting is that it's competing in the same weight class as OpenAI DeepResearch and Google's Gemini DeepResearch, but doing it with a much smaller engine under the hood.

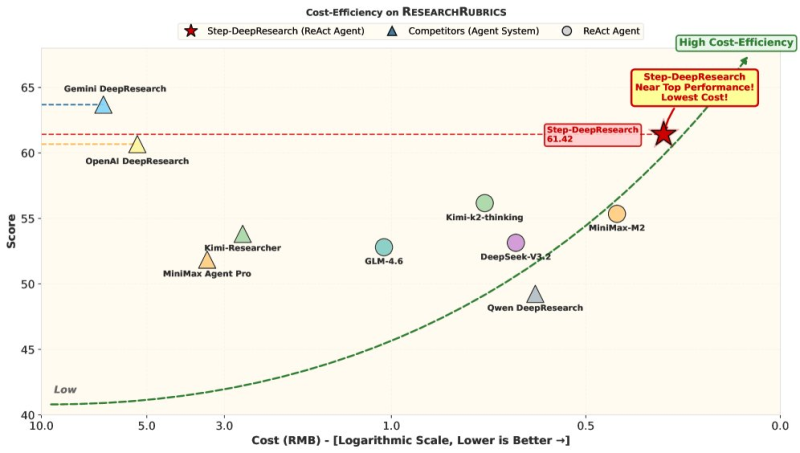

⬤ The performance chart they shared tells the story pretty clearly: Step-DeepResearch sits right at the sweet spot where cost meets capability. It clocked in at 61.42% on the benchmark while staying at the budget-friendly end of the spectrum. Compare that to the bigger players like Gemini DeepResearch, OpenAI DeepResearch, MiniMax-M2, DeepSeek-V3.2, GLM-4.6, and Kimi-Researcher: they're all fighting for top scores, but they cost more to run to get there.

⬤ There's a technical distinction worth noting: Step-DeepResearch works as a ReAct-style research agent, meaning a single model alternates between reasoning and tool calls, while some competitors operate as full-scale agent systems. Even with that difference, it's still being stacked up against Google's Gemini DeepResearch and OpenAI DeepResearch as a legitimate alternative, one that delivers solid benchmark performance without the premium price tag.
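
For readers who haven't seen the ReAct pattern before, here's a rough sketch of the loop it describes: think, pick an action, look at the result, repeat. This is not StepFun's code. The names `scripted_policy`, `fake_search`, and `react_loop` are made up for illustration, and the "model" is a hard-coded stand-in rather than a real LLM call.

```python
from typing import Callable, Dict, List, Tuple

# A policy returns (thought, action, argument) given the question and
# the observations collected so far.
Policy = Callable[[str, List[str]], Tuple[str, str, str]]


def scripted_policy(question: str, history: List[str]) -> Tuple[str, str, str]:
    """Hypothetical stand-in for a language model: search once, then finish."""
    if not history:
        return ("I should look this up.", "search", question)
    return ("I have enough to answer.", "finish", history[-1])


def fake_search(query: str) -> str:
    """Hypothetical tool that returns canned text instead of hitting the web."""
    return f"stub result for: {query}"


def react_loop(
    question: str,
    policy: Policy,
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Alternate reasoning and tool use until the policy says 'finish'."""
    history: List[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(question, history)  # reason
        if action == "finish":
            return arg
        observation = tools[action](arg)  # act
        history.append(observation)  # observe
    return "no answer within step budget"


if __name__ == "__main__":
    print(react_loop("what is X?", scripted_policy, {"search": fake_search}))
```

In a real agent the policy would be a model call and the tools would be things like web search or a browser, but the control flow stays about this simple, which is part of why a ReAct-style agent can be cheaper to run than a multi-component agent system.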

⬤ This whole cost-versus-performance conversation is heating up as AI research tools spread across companies and developer teams. With Google and OpenAI pushing their high-end platforms and Step-DeepResearch showing you can compete without breaking the bank, efficiency and accessibility are becoming just as important as raw capability in the AI research space.

Saad Ullah
