⬤ Microsoft teamed up with academic researchers to roll out MMGR (Multi-Modal Generative Reasoning), a fresh benchmark designed to push image and video models beyond surface-level performance. Instead of just checking if AI can create pretty pictures, MMGR digs into whether these systems actually grasp logic, spatial relationships, and physical dynamics. It's part of a bigger shift toward measuring real understanding versus flashy outputs.

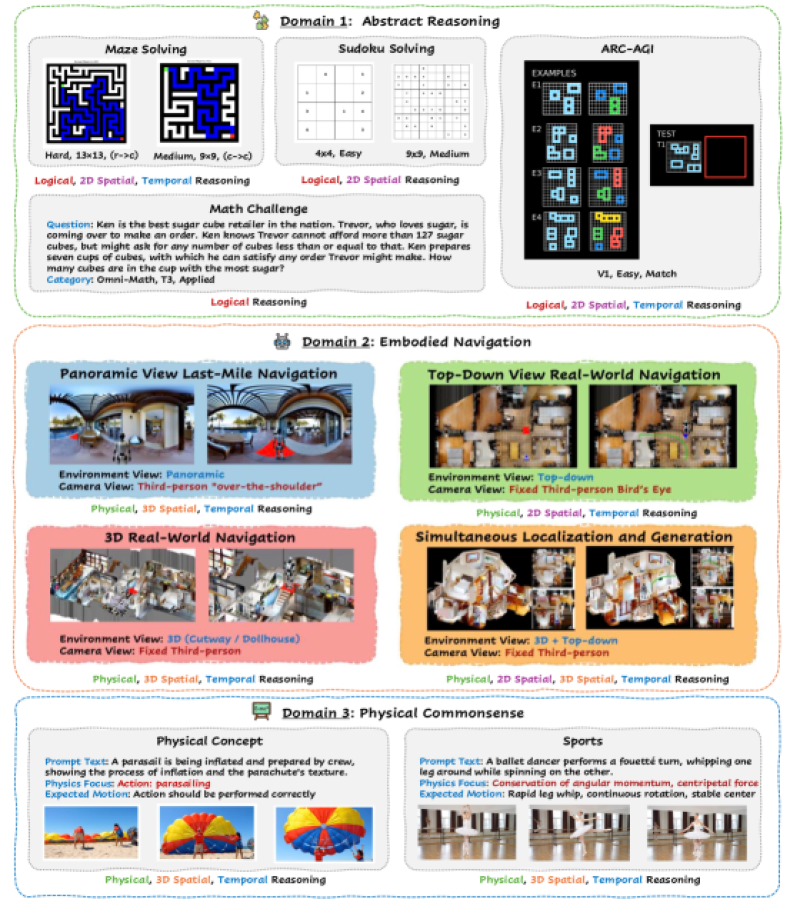

⬤ MMGR breaks down into three major testing areas. First up is abstract reasoning—think maze solving, Sudoku puzzles, visual pattern challenges, and math problems. These tasks check if models can follow rules, spot connections, and work through structured problems rather than just memorizing patterns. It's all about logical thinking and understanding two-dimensional space over time.

⬤ The second domain tackles embodied navigation through real-world scenarios like last-mile delivery navigation, overhead map reading, indoor 3D pathfinding, and simultaneous location tracking. Here, models need to maintain spatial awareness across different camera angles and viewpoints while keeping everything consistent. The third domain focuses on physical common sense—scenarios like parachute physics and athletic movement where getting the right answer means understanding actual forces and motion, not just what looks plausible.

⬤ MMGR's launch signals that the AI field is getting serious about meaningful evaluation as these systems move into practical applications. For Microsoft, backing this benchmark shows commitment to developing AI that can actually reason, not just impress. As multimodal models edge into robotics and real-world navigation, frameworks like MMGR could define what counts as genuine progress and shape how businesses decide which AI systems are ready for deployment.

Alex Dudov

Alex Dudov

Alex Dudov

Alex Dudov